Monday, 30 May 2011

2011 National Geographic Bee Champion

Answer: Sagarmatha National Park

Google is proud to support the National Geographic Bee for the third year in a row. National Geographic and Google share the same passion for inspiring and encouraging our future generation of leaders and innovators to learn about and explore the world around them. Being geographically literate and understanding the world is a vital skill for students of all ages. Technology, like Google Earth, has helped make the world a more accessible place, and students need geographic skills to be prepared for a global future.

The first-place winner, Tine Valencic, won a $25,000 college scholarship, a lifetime membership in the National Geographic Society, and a trip to the Galápagos Islands. The second-place winner and recipient of a $15,000 college scholarship was Georgia's Nilai Sarda, and third place and a $10,000 college scholarship went to Kansas' Stefan Petrović. The seven other finalists, who won $500, were Andrew Hull, of Alaska; Luke Hellum, of Arizona; Tuvya Bergson-Michelson, of California; Kevin Mi, of Indiana; Karthik Karnik, of Massachusetts; Alex Kimn, of South Dakota; and Anthony Cheng, of Utah.

Congratulations to Tine Valencic and to all of the students who participated in this year’s National Geographic Bee. We look forward to following all of you as you continue to explore the world and we expect to see some of you at Google after you have earned your degrees.

Sunday, 29 May 2011

Google Apps to Snail Mail

The days of the paperless office are not yet upon us, with physical mail delivery still popular for many types of communication including marketing information, financial documents and greetings cards. Over 5000 companies in the UK now use CFH Docmail to print, stuff, and deliver their mail, resulting in over 6 million documents being sent every month. By creating Docmail Connect for Google Apps, we at Appogee, a UK systems integrator focused on Google, have made it simple for Google Apps users to personalize, print and mail their Google documents.

As well as allowing users to send snail mail from the Google Apps menu, we made the code library for Docmail Connect for Google Apps available in project hosting. Other Google Apps Marketplace providers, such as CRM and ERP applications, can now benefit from being able to easily integrate their applications with Docmail services.

The Challenge

In order to implement the solution we needed to take the Docmail web services and create a Python interface to them. To achieve that we needed a simple Python-to-web-services layer we could rely on to build the bridge between Google App Engine and Docmail’s API, and then we needed to make the bridge work from Google Docs to the CFH Docmail service. The final challenge we faced was achieving a seamless experience for the user, which meant focusing on performance each step of the way.

The Solution (in 3 easy steps)

Step 1: Invoke a SOAP Web Service from App Engine using Python

- The HttpTransport class has a u2open method which uses Python’s socket module to set the timeout. This is not allowed on App Engine; the timeout can instead be set later using App Engine’s urlfetch module. The timeout line was removed in a new method:

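    from suds import transport
    import suds.transport.http  # makes transport.http available

    def u2open_appengine(self, u2request):
        tm = self.options.timeout
        url = self.u2opener()
        # socket.setdefaulttimeout(tm) can't call this on app engine
        if self.u2ver() < 2.6:
            return url.open(u2request)
        else:
            return url.open(u2request, timeout=tm)

    # Replace the stock method with the App Engine friendly version.
    transport.http.HttpTransport.u2open = u2open_appengine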

- The SUDs library has an extensible cache class. It provides various implementations of this; however, we wanted to use the App Engine memcache for performance. To do this, we implemented a new class to use memcache, as shown below:

    from google.appengine.api import memcache
    from suds import cache

    class MemCache(cache.Cache):
        def __init__(self, duration=3600):
            self.duration = duration
            self.client = memcache.Client()

        def get(self, id):
            return self.client.get(str(id))

        def getf(self, id):
            return self.get(id)

        def put(self, id, object):
            self.client.set(str(id), object, self.duration)

        def putf(self, id, fp):
            self.put(id, fp)

        def purge(self, id):
            self.client.delete(str(id))

        def clear(self):
            self.client.flush_all()

This was then plugged into the SUDs client class by overriding the __init__ method.

- We can now use the modified SUDs client class to make SOAP calls on App Engine! The full source code for this is available in project hosting.
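When exercising code like this away from App Engine, a dict-backed stand-in with the same method names as the MemCache class above can be handy. The sketch below is only an illustration: the class name `InMemoryCache` and the injectable `clock` argument are assumptions made for the example and are not part of SUDs or of the Docmail Connect code.

```python
import time


class InMemoryCache:
    """Hypothetical dict-backed stand-in exposing the same six methods."""

    def __init__(self, duration=3600, clock=time.time):
        self.duration = duration
        self.clock = clock          # injectable so expiry can be tested
        self.entries = {}           # str(id) -> (expiry_timestamp, object)

    def get(self, id):
        entry = self.entries.get(str(id))
        # Treat missing and expired entries the same way: return None.
        if entry is None or entry[0] <= self.clock():
            return None
        return entry[1]

    def getf(self, id):
        return self.get(id)

    def put(self, id, obj):
        self.entries[str(id)] = (self.clock() + self.duration, obj)

    def putf(self, id, fp):
        self.put(id, fp)

    def purge(self, id):
        self.entries.pop(str(id), None)

    def clear(self):
        self.entries.clear()
```

Keys are converted with `str(id)` in the same way as in the memcache-backed class, so integer and string ids refer to the same entry.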

Step 2: Google Docs to Docmail

CFH publish a full API to allow developers to integrate their applications with Docmail, a subset of which Appogee needed to use to create the interface for users to create mailings from Google Docs. Not every Docmail function is enabled in the wrapper, but the principles used can easily be extrapolated to any other function, and the key to it is getting a satisfactory link from Google Docs to Docmail.

The Docmail API can accept documents in a variety of formats including doc, docx, PDF and RTF. In order to use the API, we needed to extract Google Docs content in one of these formats. The Google Docs API has support for exporting in doc, RTF and PDF. We experimented with each before settling on RTF, which, although large in file size, did work. However, the Google App Engine urlfetch library has a 1 MB request limit, so we were not able to send files larger than 1 MB. We evaluated a number of workarounds, such as cutting the files up into chunks and sending them separately or bouncing the files off another platform, but in the end our only option was simply to prevent files larger than 1 MB from being uploaded. To achieve this we used Ajax calls to check the size of each file in real time as it is selected and provide appropriate feedback to the user. The file size cut-off is a configurable parameter, so if Google increase the limit we can adjust the app without redeploying the code.
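The server side of such a size check might look roughly like the following. This is a minimal sketch, not the Docmail Connect code: the function name, the message text and the `DEFAULT_LIMIT_BYTES` constant are all hypothetical, and in a real app the limit would be read from configuration.

```python
# Hypothetical default mirroring the 1 MB urlfetch request limit described above.
DEFAULT_LIMIT_BYTES = 1024 * 1024


def check_upload_size(content, limit_bytes=DEFAULT_LIMIT_BYTES):
    """Return (ok, message) for an exported document held as bytes."""
    size = len(content)
    if size > limit_bytes:
        # Too large to forward in a single request: report back to the user.
        return False, "File is %d bytes; the limit is %d bytes." % (size, limit_bytes)
    return True, "OK"
```

Keeping the limit as a parameter is what lets the cut-off change without touching the code that calls the check.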

Breaking this integration down, we used the Google Docs API together with the Docmail SOAP API, via our modified implementation of the SUDs library as described in step 1.

The Docmail API must be used in a logical order, to coincide with the wizard pages on the Docmail website. We can illustrate these steps in order:

- Create an instance of the Docmail client, which is an extension to the SUDs client class. The client contains the methods we need for further communication with the Docmail API:

docmail_client = client.Client(USERNAME, PASSWORD, SOURCE)

- Create / Retrieve a mailing. To create a new mailing, we create an instance of the Mailing class, and pass it into the create_mailing method:

    mailing = client.Mailing(name='test mailing')
    mailing = docmail_client.create_mailing(mailing)

To retrieve an existing mailing, we need to know the mailing guid:

mailing = docmail_client.get_mailing('enter-your-mailing-guid')

- Upload a template document. Since we are retrieving documents from Google Docs to be used as the template, we need to download it first. We do this using the Google Docs API:

    docs_client = gdata.docs.client.DocsClient()
    # authenticate client using oauth (see google docs documentation for example code)

We now need to extract the document. There are various formats you can do this in, but we found by experimenting that RTF worked best, despite being the largest in file size.

file_content = docs_client.GetFileContent(uri=doc_url + '&exportFormat=rtf')

And finally upload the template file to Docmail:

docmail_client.add_template_file(mailing.guid, file_content)

- Upload a Mailing List Spreadsheet. This is similar code to uploading a template:

    docs_client = gdata.docs.client.DocsClient()
    file_content = docs_client.GetFileContent(uri=doc_url + '&exportFormat=csv')
    docmail_client.add_mailing_list_file(mailing.guid, file_content)

- Submit the mailing for processing. Once this has been done, a proof is available for download.

docmail_client.process_mailing(mailing.guid, False, True)

- Finally, we approve and pay for the mailing:

docmail_client.process_mailing(mailing.guid, True, False)
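The steps above can be strung together in a single function. The sketch below is an illustration under stated assumptions: `send_mailing`, `RecordingClient` and the `Mailing` namedtuple are hypothetical stand-ins written for this example so that it runs without a Docmail account. Only the four method names and the two flag combinations passed to `process_mailing` come from the steps above; the meaning of each individual flag is not spelled out in this post.

```python
from collections import namedtuple

# Stand-in for client.Mailing so the sketch runs without the real wrapper.
Mailing = namedtuple("Mailing", ["name", "guid"])


class RecordingClient:
    """Hypothetical stub that records calls instead of contacting Docmail."""

    def __init__(self):
        self.calls = []

    def create_mailing(self, mailing):
        self.calls.append("create_mailing")
        return mailing._replace(guid="stub-guid")

    def add_template_file(self, guid, content):
        self.calls.append("add_template_file")

    def add_mailing_list_file(self, guid, content):
        self.calls.append("add_mailing_list_file")

    def process_mailing(self, guid, *flags):
        self.calls.append(("process_mailing",) + flags)


def send_mailing(docmail_client, mailing, template_rtf, address_csv, approve=False):
    """Run the Docmail steps in the order the API expects."""
    mailing = docmail_client.create_mailing(mailing)
    docmail_client.add_template_file(mailing.guid, template_rtf)
    docmail_client.add_mailing_list_file(mailing.guid, address_csv)
    # Submit for processing; a proof becomes available afterwards.
    docmail_client.process_mailing(mailing.guid, False, True)
    if approve:
        # Approve and pay for the mailing.
        docmail_client.process_mailing(mailing.guid, True, False)
    return mailing
```

Passing the client in as an argument keeps the ordering logic testable with a stub like the one shown, while the real Docmail client can be supplied in production.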

Step 3: Performance

When creating a system like Docmail Connect for Google Apps, overall acceptability of the system will be driven as much by performance as by functionality, so we paid particular attention to the key components driving this.

We were conscious that some Google Apps users may have a lot of Google documents, so presenting all of them for selection at once wasn’t an option. Instead, we load 100 at a time (the default) and send them to the browser using XHR calls, so it all happens without the user having to do anything and works quite fast: the user can select from thousands of documents within a few seconds.
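The idea of handing documents to the browser in fixed-size pages can be sketched in a few lines. This is an assumption-laden illustration rather than the app's code: it slices a list that is already in memory, whereas the real app pages through the Google Docs feed, and the function name `batches` is hypothetical.

```python
def batches(items, size=100):
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]
```

Each yielded slice would correspond to the payload of one XHR response.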

Next we had to address the connection to the Docmail API, where care must be taken to achieve acceptable throughput. Queries to the API had to be minimized and we didn't want the page submits to be slow: the user should be able to go from one page to the next as quickly as possible. To achieve this, we used the App Engine Task Queue. When a user submits a page which requires communication with the Docmail API we enqueue a task to do the work for us, and at the same time navigate the user to the next page in the process. This means that the server is working while the user progresses through the workflow. It also makes handling timeout errors easier, as the task can catch the errors and enqueue a replacement task. But it requires some extra planning, as some tasks cannot complete until others finish, and process checking needs to ensure new tasks only get fired off at the appropriate time.
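The two concerns described here, retrying after a timeout and holding a task back until its prerequisites have finished, can be illustrated in plain Python. The sketch below runs synchronously and does not use the App Engine Task Queue API; `TaskTimeout`, `run_workflow` and the task names in the usage note are hypothetical.

```python
class TaskTimeout(Exception):
    """Stand-in for a timeout raised while calling a remote API."""


def run_workflow(tasks, depends_on, max_attempts=3):
    """Run callables in `tasks` (name -> callable), honouring `depends_on`
    (name -> list of prerequisite names) and retrying on TaskTimeout.
    Returns the names in the order they completed."""
    done = []
    pending = list(tasks)
    while pending:
        # A task is ready only when all of its prerequisites have completed.
        ready = [n for n in pending
                 if all(d in done for d in depends_on.get(n, []))]
        if not ready:
            raise RuntimeError("unsatisfiable dependencies: %r" % pending)
        for name in ready:
            for attempt in range(1, max_attempts + 1):
                try:
                    tasks[name]()
                    break
                except TaskTimeout:
                    if attempt == max_attempts:
                        raise
            done.append(name)
            pending.remove(name)
    return done
```

For example, with tasks named `create`, `upload` and `process`, declaring `{"upload": ["create"], "process": ["upload"]}` ensures the mailing is created before the upload runs, and the upload finishes before processing starts.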

We hope the wrapper, together with the key learnings written up here, will encourage others to have a go at wiring their applications to the Docmail delivery services.

Wednesday, 25 May 2011

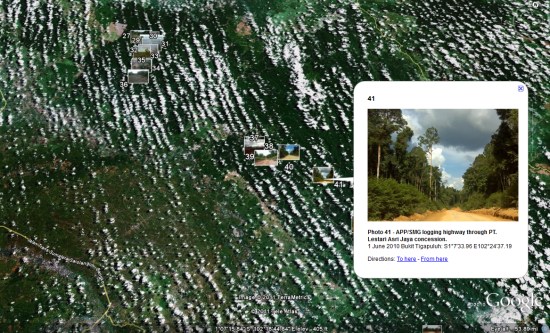

Google Earth: Saving the Tigers of Sumatra

A related story has just come out about an area of Sumatra that is one of the last homes for the Indonesian Tiger, and it's being destroyed by paper companies. The WWF has put together a variety of KMZ files to show the destruction in the area, with features such as geo-referenced photos as seen here:

To learn more simply head over to their site and scroll down below the Google Earth screenshot to find the various KMZ files, or watch the video below for an overview of what's going on in Sumatra:

Tuesday, 24 May 2011

Free data with Reference Map

The data is a module of a bigger application I am slowly building over time. So far it includes a reference map, some popular administrative boundary data for Australia and a separate, closely linked component: a user sign-up and PDF report download module.

In order to generate a map with a region of your choice just follow these simple steps:

1. Download the list of available regions from the above table.

2. Look up naming convention for required region(s).

3. List all required regions in the URL, following this pattern (this example shows how to include one of each region, as listed in the above table)

http://www.aus-emaps.com/svs/ref/map.php?kmzl=2000,Sydney_NSW,Sydney__C_,Sydney,Sydney&svs=2,4,5,6,7

[service codes svs: 2-postcodes, 4-suburbs, 5-LGA, 6-CED, 7-ATR]

[Additional setup options are available, please see full instructions on aus-emaps.com free widgets page]

4. Copy URL to your browser/web page to display the map.

5. If you are interested in adding extra functionality to your site, see also this example of how to script access to the service and embed a map into a web page.
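The URL pattern from step 3 can also be assembled programmatically. The following is a small sketch: the function name `build_map_url` is hypothetical, and only the `kmzl` and `svs` parameters shown in the example URL are used.

```python
from urllib.parse import urlencode

BASE_URL = "http://www.aus-emaps.com/svs/ref/map.php"


def build_map_url(regions, service_codes):
    """Join region names and service codes into the query string pattern."""
    query = urlencode(
        {
            "kmzl": ",".join(regions),
            "svs": ",".join(str(code) for code in service_codes),
        },
        safe=",",  # keep the comma separators unescaped
    )
    return BASE_URL + "?" + query
```

Calling `build_map_url(["2000", "Sydney_NSW", "Sydney__C_", "Sydney", "Sydney"], [2, 4, 5, 6, 7])` reproduces the example URL from step 3.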

Paella for Google Summer of Code Mentors

The dinner brought together both new and experienced mentors from 29 of this year’s organizations to chat about their expectations for the program and their students. The casual meetup was also an opportunity for the mentors to share their questions and concerns with one another and to share advice on successfully fulfilling their roles as mentors.

Monday, 23 May 2011

Sunday, 22 May 2011

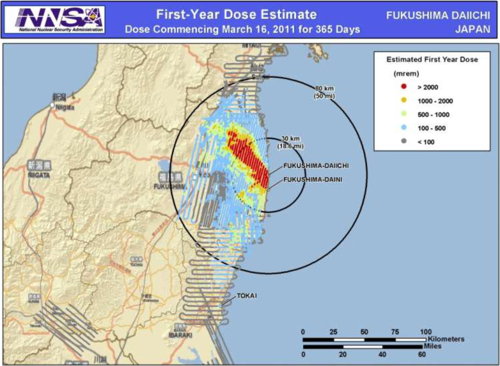

Long-Term Radiation Exposure at Fukushima on the map

The U.S. National Nuclear Security Administration has produced a map (as part of a presentation) showing the estimated first-year, long-term radiation dose in and around the Fukushima nuclear plant. “In the red swath of land northwest of the plant where weather deposited a lot of fallout, potential exposures exceed 2000 millirems/year. That is the level at which the U.S. Department of Homeland Security would consider relocating the public,” says ScienceInsider. “Although 2000 millirems over 1 year isn’t an immediate health threat, it’s enough to cause roughly one extra cancer case in 500 young adults and one case in 100 1-year-olds.”

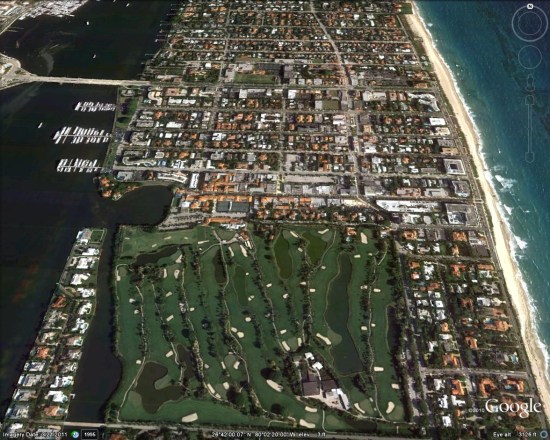

New Google Earth Imagery - May 18

As is usually the case, you can use Google Maps to determine for sure whether or not a specific area is fresh. This new imagery isn't in Google Maps yet, so you can compare Earth vs. Maps to see what's new; the fresh imagery is already in Google Earth, but the old imagery is still in Google Maps. If you compare the two side-by-side and they're not identical, that means that you've found a freshly updated area in Google Earth!

[UPDATED - 18-May, 9:40pm EST]

- Australia: Helensburgh -- thanks 'Pete'

- China: Lushun, Xiaopingdao -- thanks 'Munden'

- Greece: Khania -- thanks 'Munden'

- Hungary: Batonyterenya, Paszto -- thanks 'eHOG'

- Iceland: Selfoss -- thanks 'Noel'

- India: Pallimukku -- thanks 'Munden'

- Japan: Kamijima, Sanuki, Minamiawaji -- thanks 'Munden'

- Norway: Various areas -- thanks 'Munden'

- Portugal: Castelo Branco -- thanks 'lain'

- Romania: Various areas -- thanks 'cristi'

- Russia: Severodvinsk -- thanks 'Munden'

- United States: Alaska (Anchorage), Florida (Broward, Miami, Palm Beach), Michigan (Grand Rapids), New York (Buffalo), North Carolina (Hickory), Texas (Dallas), Virginia (Lynchburg) -- thanks 'Brian', 'ChrisK', 'GT', 'Munden' and 'toyle'

Saturday, 21 May 2011

ADK at Maker Faire

Following up on yesterday’s ADK post, we should take this opportunity to note that the Faire has chased a lot of ADK-related activity out of the woodwork. The level of traction is pretty surprising given that this stuff only decloaked last week.

Convenience Library

First, there’s a new open-source project called Easy Peripheral Controller. This is a bunch of convenience/abstraction code; its goal is to help n00bs make their first robot or hardware project with Android. It takes care of lots of the mysteries of microcontroller wrangling in general and Arduino in particular.

Bits and Pieces from Googlers at the Faire

Most of these are 20%-project output.

Project Tricorder: Using the ADK and Android to build a platform to support making education about data collection and scientific process more interesting.

Disco Droid: Modified a bugdroid with servos and the ADK to show off some Android dance moves.

Music Beta, by Google: Android + ADK + cool box with lights for a Music Beta demo.

Optical Networking: Optical network port connected to the ADK.

Interactive Game: Uses ultrasonic sensors and ADK to control an Android game.

Robot Arm: Phone controlling robot arm for kids to play with.

Bugdroids: Balancing Bugdroids running around streaming music from an Android phone.

The Boards

We gave away an ADK hardware dev kit sample to several hundred people at Google I/O, with the idea of showing manufacturers what kind of thing might be useful. This seems to have worked better than we’d expected; we know of no fewer than seven makers working on Android Accessory Development Kits. Most of these are still in “Coming Soon” mode, but you’ll probably be able to get your hands on some at the Faire.

- RT Technology's board is pretty much identical to the kit we handed out at I/O.

- SparkFun has one in the works, coming soon.

- Also, SparkFun’s existing IOIO product will be getting ADK-compatible firmware.

- Arduino themselves also have an ADK bun in the oven.

- Seeedstudio’s Seeeduino Main Board.

- 3D Robotics’ PhoneDrone Board.

- Microchip’s Accessory Development Starter Kit.

Friday, 20 May 2011

Chromebook: A new kind of computer

A little less than two years ago we set out to make computers much better. Today, we’re announcing the first Chromebooks from our partners, Samsung and Acer. These are not typical notebooks. With a Chromebook you won’t wait minutes for your computer to boot and browser to start. You’ll be reading your email in seconds. Thanks to automatic updates the software on your Chromebook will get faster over time. Your apps, games, photos, music, movies and documents will be accessible wherever you are and you won't need to worry about losing your computer or forgetting to back up files. Chromebooks will last a day of use on a single charge, so you don’t need to carry a power cord everywhere. And with optional 3G, just like your phone, you’ll have the web when you need it. Chromebooks have many layers of security built in so there is no anti-virus software to buy and maintain. Even more importantly, you won't spend hours fighting your computer to set it up and keep it up to date.

At the core of each Chromebook is the Chrome web browser. The web has millions of applications and billions of users. Trying a new application or sharing it with friends is as easy as clicking a link. A world of information can be searched instantly and developers can embed and mash-up applications to create new products and services. The web is on just about every computing device made, from phones to TVs, and has the broadest reach of any platform. With HTML5 and other open standards, web applications will soon be able to do anything traditional applications can do, and more.

Chromebooks will be available online June 15 in the U.S., U.K., France, Germany, Netherlands, Italy and Spain. More countries will follow in the coming months. In the U.S., Chromebooks will be available from Amazon and Best Buy and internationally from leading retailers.

Even with dedicated IT departments, businesses and schools struggle with the same complex, costly and insecure computers as the rest of us. To address this, we’re also announcing Chromebooks for Business and Education. This service from Google includes Chromebooks and a cloud management console to remotely administer and manage users, devices, applications and policies. Also included are enterprise-level support, device warranties and replacements, as well as regular hardware refreshes. Monthly subscriptions will start at $28/user for businesses and $20/user for schools.

There are over 160 million active users of Chrome today. Chromebooks bring you all of Chrome's speed, simplicity and security without the headaches of operating systems designed 20 to 30 years ago. We're very proud of what the Chrome team along with our partners have built, and with seamless updates, it will just keep getting better.

For more details please visit www.google.com/chromebook.

Wednesday, 18 May 2011

New Zealand 1:50,000 Topo Maps

New Zealand Topo Map is an interactive topographic map of New Zealand using LINZ's official 1:50,000 / Topo50 and 1:250,000 / Topo250 maps.

http://www.topomap.co.nz/NZTopoMap/9432/Vampire/Westland

Lord of the Rings (and The Hobbit 2012) Fans will be interested in

http://www.topomap.co.nz/NZTopoMap/7770/Lindis%20Pass/Otago

Tuesday, 17 May 2011

The Go programming language is coming to Google App Engine

Go is an open source language, initially designed at Google, that was released in November 2009 and has seen significant development since launch. It is a statically typed, compiled language with a dynamic and lightweight feel. It’s also an interesting new option for App Engine because Go apps will be compiled to native code, making Go a good choice for more CPU-intensive tasks. Plus the garbage collection and concurrency features of the language, combined with excellent libraries, make it a great fit for web apps.

As of today, the App Engine SDK for Go is available for download, and we will soon enable deployment of Go apps into the App Engine infrastructure. If you’re interested in starting early, sign up to be first through the door when we open it up to early testers. Once it proves solid, we’ll open it up to everyone, although it will remain an experimental App Engine feature for a while.

You don’t need an existing Go installation; the SDK is fully self-contained, so it’s very simple to get a local web app up and running. The SDK is a really easy way to start playing with Go.

Monday, 16 May 2011

Bin Laden's Compound

Update, 6 PM:

Defense department maps and imagery of the Bin Laden compound have been released; see galleries here and here, among others. See also the New York Times’s interactive map. And then there’s this recent satellite image of Abbottabad from DigitalGlobe, which Ogle Earth’s Stefan Geens has made into a Google Earth overlay.

Wednesday, 11 May 2011

Places everybody, let the show begin...

This represents the culmination of the Developer Preview launched last year, shortly after we introduced the Places API at Google I/O 2010. Interest in the Preview was overwhelming and we have been amazed by the innovative use cases suggested for the API. The developers we worked with provided a great deal of extremely valuable feedback on all aspects of the API, including features, performance, usability, and terms of use.

We’ve been working hard to implement the recommendations we received during the Preview. As a result the service launching today includes many new features, most of which are a direct result of this developer feedback:

- A globally consistent type scheme for Places, spanning more than 100 types such as bar, restaurant, and lodging

- Name and type based query support

- A significantly simpler key based authentication scheme

- Global coverage across every country covered by Google Maps

- Google APIs Console integration, which provides group ownership of projects, key management, and usage monitoring

- Instant reflection of new Places submitted by an app in subsequent searches made by that app, with new Places shared with all apps after moderation

- Real time reranking of search results based on current check-in activity, so that Places that are currently popular are automatically ranked higher in searches by your app

Both the Places API Search service and the Places API Autocomplete service are offered as XML/JSON REST based web services. These APIs are currently both in Google Code Labs, which means they are not yet included in Maps API Premier. However we are working to graduate the APIs from Code Labs in the near future, at which point the service will also be offered to Maps API Premier developers.
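To give a feel for what a request to a REST-based JSON search service of this kind looks like, here is a hedged sketch that only builds a request URL and makes no network call. The endpoint path and the parameter names (`location`, `radius`, `types`, `name`, `sensor`, `key`) are assumptions based on the launch-era service and should be checked against the Places API documentation before use; the function name is hypothetical.

```python
from urllib.parse import urlencode

# Assumed launch-era endpoint; verify against the Places API documentation.
PLACES_SEARCH_URL = "https://maps.googleapis.com/maps/api/place/search/json"


def places_search_url(lat, lng, radius_m, api_key, types=None, name=None):
    """Build a Place Search request URL (assumed parameter names)."""
    params = [
        ("location", "%s,%s" % (lat, lng)),
        ("radius", str(radius_m)),
    ]
    if types:
        # Several types are joined with a pipe, e.g. bar|restaurant.
        params.append(("types", "|".join(types)))
    if name:
        params.append(("name", name))
    params.append(("sensor", "false"))
    params.append(("key", api_key))
    return PLACES_SEARCH_URL + "?" + urlencode(params, safe=",|")
```

The `key` value is the APIs Console key mentioned in this post; `types` and `name` correspond to the type and name based query support listed among the new features.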

To get started, please follow the instructions in the documentation for obtaining an APIs console key, and enabling the Places API on that key. If you have joined us at Google I/O this year, come along to our session on "Building Location Based apps using Google APIs" at 3pm on Wednesday, in which our Tech Lead, Marcelo Camelo, will be diving into the API in more detail.

In addition to these web services we are also launching a new places library in the Google Maps API which includes:

- A PlacesService that allows Places API queries to be issued by Maps API applications

- A class that can attach Autocomplete behaviour to any text field on a web page, with the predicted places biased to a specific location or map viewport

PlacesService to display Places on a map in response to changes in the map view port. An individual Place can also be mapped using the Autocomplete enabled search field:

If you would like to provide any feedback about the Places API or Maps API, or you have suggestions for improvements or new features, please let us know using the Maps API Issue Tracker. You can also discuss our APIs using the Maps API Developer Forums.

We’re very excited to make all of these great Places services available to all of our Maps API developers today. We know many of you have been eagerly awaiting access to the Places API, and we appreciate your patience. Places bridge the divide between the way that maps and computers represent the world, and the way that people relate to it. We believe that the launch of the Places API will spark a whole new wave of innovative location based application development, both on mobile and desktop, and we can’t wait to see how it is used.

Tuesday, 10 May 2011

Using the power of mapping to support South Sudan

South Sudan is a large but under-mapped region, and there are very few high-quality maps that display essential features like roads, hospitals and schools. Up-to-date maps are particularly important to humanitarian aid groups, as they help responders target their efforts and mobilize their resources of equipment, personnel and supplies. More generally, maps are an important foundation for the development of the infrastructure and economy of the country and region.

The Map Maker community—a wide-ranging group of volunteers that help build more comprehensive maps of the world using our online mapping tool, Google Map Maker—has been contributing to the mapping effort for Sudan since the referendum on January 9. To aid their work, we’ve published updated satellite imagery of the region, covering 125,000 square kilometers and 40 percent of the U.N.’s priority areas, to Google Earth and Maps.

The goal of last week’s event was to engage and train members of the Sudanese diaspora in the United States, and others who have lived and worked in the region, to use Google Map Maker so they could contribute their local knowledge of the region to the ongoing mapping effort, particularly in the area of social infrastructure. Our hope is that this event and others like it will help build a self-sufficient mapping community that will contribute their local expertise and remain engaged in Sudan over time.

We were inspired by the group’s enthusiasm. One attendee told us: “I used to live in this small village that before today did not exist on any maps that I know of...a place unknown to the world. Now I can show to my kids, my friends, my community, where I used to live and better tell the story of my people.”

The group worked together to make several hundred edits to the map of Sudan in four hours. As those edits are approved, they’ll appear live in Google Maps, available for all the world to see. But this wasn’t just a one-day undertaking—attendees will now return to their home communities armed with new tools and ready to teach their friends and family how to join the effort. We look forward to seeing the Southern Sudanese mapping community grow and flourish.

Royal Wedding and the Cloud

Monday, 9 May 2011

OpenID Attribute Exchange Security

The researchers determined that the affected sites were not confirming that certain information passed through AX was properly signed. If the site was only using AX to receive information like the user’s self-asserted gender, then this issue would be minor. However, if it was being used to receive security-sensitive information that only the identity provider should assert, then the consequences could be worse.

A specific scenario identified involves a website that accepts an unsigned AX attribute for email address, and then logs the user in to a local account on that website associated with the email address. When a website asks Google’s OpenID provider (IDP) for someone’s email address, we always sign it in a way that cannot be replaced by an attacker. However, many websites do not ask for email addresses, for privacy reasons among others, and so it is a perfectly legitimate response for the IDP to not include this attribute by default. An attacker could forge an OpenID request that doesn’t ask for the user’s email address, and then insert an unsigned email address into the IDP’s response. If the attacker relays this response to a website that doesn’t notice that this attribute is unsigned, the website may be tricked into logging the attacker in to any local account.

The researchers contacted the primary websites they identified with this vulnerability, and those sites have already deployed a fix. Similarly, Google and other OpenID Foundation members have worked to identify many other websites that were impacted and have helped them deploy a fix. There are no known cases of this attack being exploited at this point in time.

A detailed explanation of the use of claimed IDs and email addresses can be found in Google’s OpenID best practices.

Google would like to thank security researchers Rui Wang, Shuo Chen and XiaoFeng Wang for reporting their findings. The OpenID Foundation has also done a similar blog post on the issue.

Action Required:

- If you are an OpenID relying party, then you should read the Suggested Fix section below to see if this vulnerability might apply to you, and what to do about it.

- If you are an application developer that uses OpenID relying party services from someone else, like your container provider or some network intermediary, please read the Suggested Fix section to see if your service is listed there. Otherwise, you should check with that entity to make sure they are not susceptible to this issue.

As a first step, we recommend modifying vulnerable relying parties to accept AX attribute values only when signed, irrespective of how those attributes might get used.
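A relying party can apply this recommendation by keeping only those AX values whose field names appear in the `openid.signed` list of the response. The sketch below is an illustration and not code from any of the libraries mentioned in this post: the function name is hypothetical, the response is assumed to be available as a plain dict of `openid.*` parameters, and the check is only meaningful after the response signature itself has been verified by your OpenID library.

```python
def signed_ax_attributes(params, ax_alias):
    """Return {attribute_alias: value} for AX values covered by openid.signed.

    `params` maps full parameter names (e.g. "openid.ax.value.email") to
    values; `ax_alias` is the namespace alias the response uses for AX.
    """
    # openid.signed lists field names without the "openid." prefix.
    signed = set(params.get("openid.signed", "").split(","))
    prefix = "openid.%s.value." % ax_alias
    accepted = {}
    for key, value in params.items():
        if key.startswith(prefix) and key[len("openid."):] in signed:
            accepted[key[len(prefix):]] = value
    return accepted
```

With this filter, an email address injected into a response without being added to the signed list is simply dropped, so it can never be used to look up a local account.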

During our investigation we confirmed that apps using the OpenID4Java library, with or without the Step2 wrapper, are prone to accepting unsigned AX attributes. OpenID4Java has been patched with the fix in version 0.9.6.662 (19th April, 2011).

Kay Framework was known to be vulnerable and has since been patched. Users should upgrade to version 1.0.2 or later. Note that Google App Engine developers that use its built-in OpenID support do not need to do anything.

Other libraries may have the same issue, although we do not believe that the default usage of OpenID services and libraries from Janrain, Ping Identity and DotNetOpenAuth is susceptible to this attack. However, the defaults may be overridden and you should double check your code for that.

We also suggest reviewing your usage of email addresses retrieved via OpenID to ensure that adequate safeguards are in place. A detailed explanation of the use of claimed IDs and email addresses can be found in our OpenID best practices published for Apps Marketplace developers that also apply to relying parties in general.

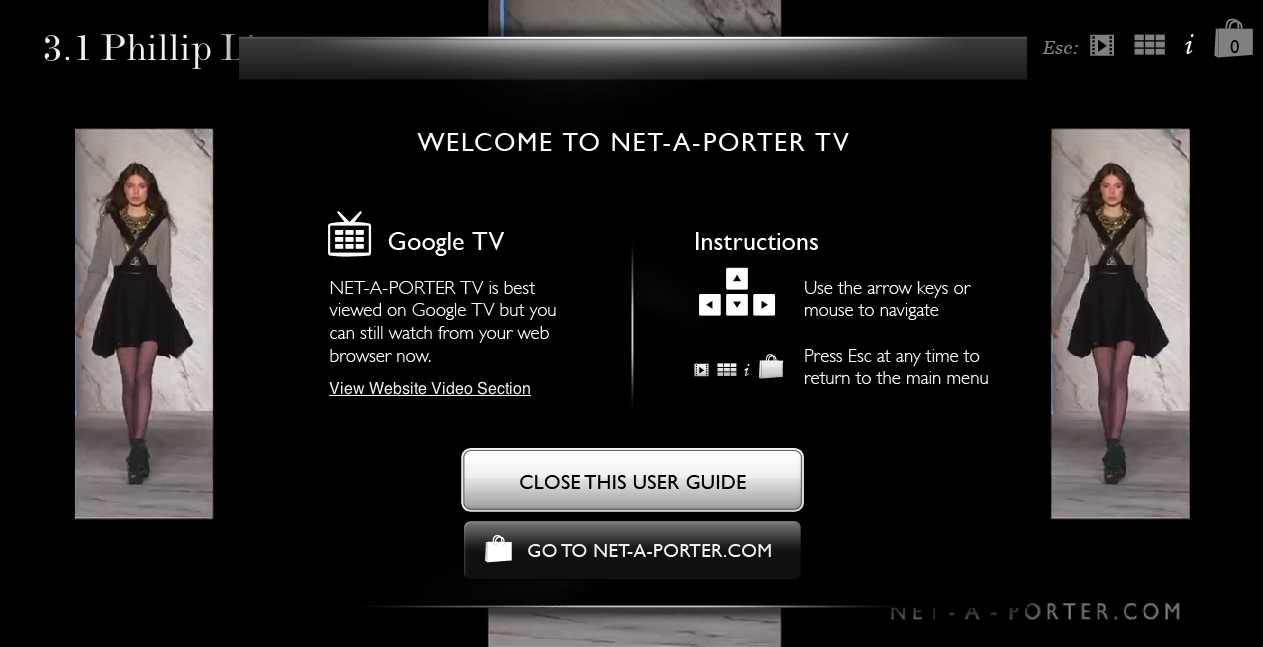

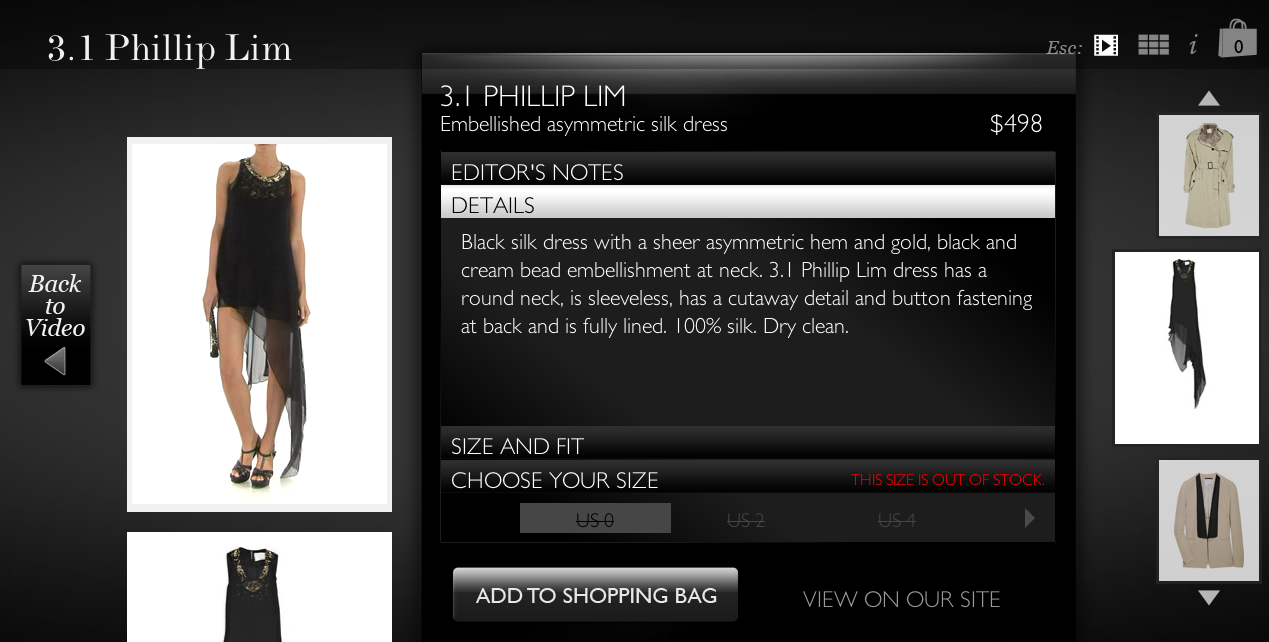

NET-A-PORTER.COM - fashion and commerce to Google TV

NET-A-PORTER TV users can watch a variety of videos featuring designer runway shows, interviews, and additional feature clips. Users can also browse their collection of luxury name brand items from premium designers like Phillip Lim, Alexander Wang, Michael Kors, Erdem, and others. When you watch runway videos, a vertical product carousel is displayed showing items matching the runway model's outfit. The product carousel dynamically updates and stays in sync with the video. While browsing, you can select to view more details about any specific item and add it to your shopping cart. When you’re done, you can complete your transaction on the original site.

Contextually matching merchandise items based on video playback is not only compelling to users but also makes online shopping a bit more interactive and enjoyable. This adds a nice touch which facilitates a low-pressure and casual experience, great for the living room context.

The NET-A-PORTER Labs team split the web app into two major sections, video and user interface. For video, they used a lightweight JavaScript MVC wrapper around a chrome-less Brightcove player. The model fetches video data, the view manages video playback, and the controller ties the two together. For user interface, they relied on jQuery to navigate the DOM and make their app keyboard navigable. The team created a custom event handling system which captures key events and calls handler functions corresponding to a specific set of elements. They also used CSS3 transitions to animate vertical scrolling of the product carousel.

To take a deeper technical dive into the inner workings of NET-A-PORTER TV, be sure to check out their article written by James Christian and Scott Seaward from the NET-A-PORTER Labs team. This article describes everything from the initial concept to interface design and even to video production.

We thank the team at NET-A-PORTER.COM for their continued dedication, support, and persistence in improving their Google TV web app. We look forward to seeing what they come up with next.

Sunday, 8 May 2011

CiviCRM – Creating a Custom Report

I’m quite familiar with Drupal (the backend framework on which CiviCRM sits), but I hadn’t got any particular experience with CiviCRM itself. I have to say that, out-of-the-box, Drupal + CiviCRM is an amazingly powerful combination, and it allowed me to build a prototype demonstrating most of the major functions (including importing their existing dataset from a variety of messy Excel spreadsheets) using the built-in options in just a few weeks. However (and, in accordance with the Pareto principle), the last 20% or so of their requirements have taken a little bit longer to fulfil…

Many of these requirements involve developing custom reports. Or, to use specific CiviCRM-speak, developing reports based on custom report templates – i.e. a report based on a customised set of data (customising an existing report by altering, say, display fields, filtering or grouping options that are already exposed in the underlying template is easy).

The process for creating a customised report template – a whole new type of report – is as follows:

1. Firstly, ensure that you have configured CiviCRM directory paths for both Custom Templates and Custom PHP. These can be set at Administer –> Configure –> Global Settings –> Directories. I set my paths to point at /sites/default/files/civicrm/custom_templates and /sites/default/files/civicrm/custom_php , respectively.

2. Within the specified custom directories, I then created a directory structure that matched the location of the CiviCRM built-in reports in the modules/civicrm directory. So, for example, I created a directory structure that looked like:

sites/default/files/civicrm/custom_templates/CRM/Report/Form

sites/default/files/civicrm/custom_php/CRM/Report/Form

3. The next step is to create the template file. To create a new standard report, the only thing this template needs to contain is a reference to the standard CiviCRM report form, as follows:

{include file="CRM/Report/Form.tpl"}

That’s it. Save the file in the sites/default/files/civicrm/custom_templates/CRM/Report/Form directory with a suitable name (the report I’m creating in this case is called TargetActivity.tpl).

4. Next, create the PHP file. This file contains a class that extends the default CRM_Report_Form class, and specifies the query that should be run to populate the report, the columns to display, group by, and sort, for example. The report I’m creating in this case is a target activity report – in other words it shows a list of recent activity (phone calls, emails, letters etc.) together with the target of that activity – who the person being contacted was (the built-in CiviCRM report focusses on the source contact – the person who initiated the phone call, not the target contact). The PHP file I used for this custom report was based on the default Activity.php file, modified as follows:

enableComponents );

$this->_columns = array(

'civicrm_contact' =>

array( 'dao' => 'CRM_Contact_DAO_Contact',

'fields' =>

array(

'contact_target' =>

array( 'name' => 'display_name' ,

'title' => ts( 'Target Contact Name' ),

'alias' => 'civicrm_contact_target',

'default' => true,

'required' => true, ),

'contact_source' =>

array( 'name' => 'display_name' ,

'title' => ts( 'Source Contact Name' ),

'alias' => 'contact_civireport',

'no_repeat' => true ),

),

'filters' =>

array( 'contact_source' =>

array('name' => 'sort_name' ,

'alias' => 'contact_civireport',

'title' => ts( 'Source Contact Name' ),

'operator' => 'like',

'type' => CRM_Report_Form::OP_STRING ),

'contact_target' =>

array( 'name' => 'sort_name' ,

'alias' => 'civicrm_contact_target',

'title' => ts( 'Target Contact Name' ),

'operator' => 'like',

'type' => CRM_Report_Form::OP_STRING ) ),

'grouping' => 'contact-fields',

),

'civicrm_email' =>

array( 'dao' => 'CRM_Core_DAO_Email',

'fields' =>

array(

'contact_target_email' =>

array( 'name' => 'email' ,

'title' => ts( 'Target Contact Email' ),

'alias' => 'civicrm_email_target', ),

),

),

'civicrm_activity' =>

array( 'dao' => 'CRM_Activity_DAO_Activity',

'fields' =>

array( 'id' =>

array( 'no_display' => true,

'required' => true

),

'activity_type_id' =>

array( 'title' => ts( 'Activity Type' ),

'default' => true,

'type' => CRM_Utils_Type::T_STRING

),

'activity_subject' =>

array( 'title' => ts('Subject'),

'default' => true,

),

'source_contact_id' =>

array( 'no_display' => true ,

'required' => true , ),

'activity_date_time'=>

array( 'title' => ts( 'Activity Date'),

'default' => true ),

'status_id' =>

array( 'title' => ts( 'Activity Status' ),

'default' => true ,

'type' => CRM_Utils_Type::T_STRING ), ),

'filters' =>

array( 'activity_date_time' =>

array( 'default' => 'this.month',

'operatorType' => CRM_Report_Form::OP_DATE),

'activity_subject' =>

array( 'title' => ts( 'Activity Subject' ) ),

'activity_type_id' =>

array( 'title' => ts( 'Activity Type' ),

'operatorType' => CRM_Report_Form::OP_MULTISELECT,

'options' => CRM_Core_PseudoConstant::activityType( true, false, false, 'label', true ), ),

'status_id' =>

array( 'title' => ts( 'Activity Status' ),

'operatorType' => CRM_Report_Form::OP_MULTISELECT,

'options' => CRM_Core_PseudoConstant::activityStatus(), ),

),

'group_bys' =>

array( 'source_contact_id' =>

array('title' => ts( 'Source Contact' ),

'default' => true ),

'activity_date_time' =>

array( 'title' => ts( 'Activity Date' ) ),

'activity_type_id' =>

array( 'title' => ts( 'Activity Type' ) ),

),

'grouping' => 'activity-fields',

'alias' => 'activity'

),

'civicrm_activity_assignment' =>

array( 'dao' => 'CRM_Activity_DAO_ActivityAssignment',

'fields' =>

array(

'assignee_contact_id' =>

array( 'no_display' => true,

'required' => true ), ),

'alias' => 'activity_assignment'

),

'civicrm_activity_target' =>

array( 'dao' => 'CRM_Activity_DAO_ActivityTarget',

'fields' =>

array(

'target_contact_id' =>

array( 'no_display' => true,

'required' => true ), ),

'alias' => 'activity_target'

),

'civicrm_case_activity' =>

array( 'dao' => 'CRM_Case_DAO_CaseActivity',

'fields' =>

array(

'case_id' =>

array( 'name' => 'case_id',

'no_display' => true,

'required' => true,

),),

'alias' => 'case_activity'

),

);

parent::__construct( );

}

function select( ) {

$select = array( );

$this->_columnHeaders = array( );

foreach ( $this->_columns as $tableName => $table ) {

if ( array_key_exists('fields', $table) ) {

foreach ( $table['fields'] as $fieldName => $field ) {

if ( CRM_Utils_Array::value( 'required', $field ) ||

CRM_Utils_Array::value( $fieldName, $this->_params['fields'] ) ) {

if ( $tableName == 'civicrm_email' ) {

$this->_emailField = true;

}

$select[] = "{$field['dbAlias']} as {$tableName}_{$fieldName}";

$this->_columnHeaders["{$tableName}_{$fieldName}"]['type'] = CRM_Utils_Array::value( 'type', $field );

$this->_columnHeaders["{$tableName}_{$fieldName}"]['title'] = CRM_Utils_Array::value( 'title', $field );

$this->_columnHeaders["{$tableName}_{$fieldName}"]['no_display'] = CRM_Utils_Array::value( 'no_display', $field );

}

}

}

}

$this->_select = "SELECT " . implode( ', ', $select ) . " ";

}

function from( ) {

$this->_from = "

FROM civicrm_activity {$this->_aliases['civicrm_activity']}

LEFT JOIN civicrm_activity_target {$this->_aliases['civicrm_activity_target']}

ON {$this->_aliases['civicrm_activity']}.id = {$this->_aliases['civicrm_activity_target']}.activity_id

LEFT JOIN civicrm_activity_assignment {$this->_aliases['civicrm_activity_assignment']}

ON {$this->_aliases['civicrm_activity']}.id = {$this->_aliases['civicrm_activity_assignment']}.activity_id

LEFT JOIN civicrm_contact contact_civireport

ON {$this->_aliases['civicrm_activity']}.source_contact_id = contact_civireport.id

LEFT JOIN civicrm_contact civicrm_contact_target

ON {$this->_aliases['civicrm_activity_target']}.target_contact_id = civicrm_contact_target.id

LEFT JOIN civicrm_contact civicrm_contact_assignee

ON {$this->_aliases['civicrm_activity_assignment']}.assignee_contact_id = civicrm_contact_assignee.id

{$this->_aclFrom}

LEFT JOIN civicrm_option_value

ON ( {$this->_aliases['civicrm_activity']}.activity_type_id = civicrm_option_value.value )

LEFT JOIN civicrm_option_group

ON civicrm_option_group.id = civicrm_option_value.option_group_id

LEFT JOIN civicrm_case_activity case_activity_civireport

ON case_activity_civireport.activity_id = {$this->_aliases['civicrm_activity']}.id

LEFT JOIN civicrm_case

ON case_activity_civireport.case_id = civicrm_case.id

LEFT JOIN civicrm_case_contact

ON civicrm_case_contact.case_id = civicrm_case.id ";

if ( $this->_emailField ) {

$this->_from .= "

LEFT JOIN civicrm_email civicrm_email_source

ON {$this->_aliases['civicrm_activity']}.source_contact_id = civicrm_email_source.contact_id AND

civicrm_email_source.is_primary = 1

LEFT JOIN civicrm_email civicrm_email_target

ON {$this->_aliases['civicrm_activity_target']}.target_contact_id = civicrm_email_target.contact_id AND

civicrm_email_target.is_primary = 1

LEFT JOIN civicrm_email civicrm_email_assignee

ON {$this->_aliases['civicrm_activity_assignment']}.assignee_contact_id = civicrm_email_assignee.contact_id AND

civicrm_email_assignee.is_primary = 1 ";

}

}

function where( ) {

$this->_where = " WHERE civicrm_option_group.name = 'activity_type' AND

{$this->_aliases['civicrm_activity']}.is_test = 0 AND

{$this->_aliases['civicrm_activity']}.is_deleted = 0 AND

{$this->_aliases['civicrm_activity']}.is_current_revision = 1";

$clauses = array( );

foreach ( $this->_columns as $tableName => $table ) {

if ( array_key_exists('filters', $table) ) {

foreach ( $table['filters'] as $fieldName => $field ) {

$clause = null;

if ( CRM_Utils_Array::value( 'type', $field ) & CRM_Utils_Type::T_DATE ) {

$relative = CRM_Utils_Array::value( "{$fieldName}_relative", $this->_params );

$from = CRM_Utils_Array::value( "{$fieldName}_from" , $this->_params );

$to = CRM_Utils_Array::value( "{$fieldName}_to" , $this->_params );

$clause = $this->dateClause( $field['name'], $relative, $from, $to, $field['type'] );

} else {

$op = CRM_Utils_Array::value( "{$fieldName}_op", $this->_params );

if ( $op ) {

$clause =

$this->whereClause( $field,

$op,

CRM_Utils_Array::value( "{$fieldName}_value", $this->_params ),

CRM_Utils_Array::value( "{$fieldName}_min", $this->_params ),

CRM_Utils_Array::value( "{$fieldName}_max", $this->_params ) );

}

}

if ( ! empty( $clause ) ) {

$clauses[] = $clause;

}

}

}

}

if ( empty( $clauses ) ) {

$this->_where .= " ";

} else {

$this->_where .= " AND " . implode( ' AND ', $clauses );

}

if ( $this->_aclWhere ) {

$this->_where .= " AND {$this->_aclWhere} ";

}

}

function groupBy( ) {

$this->_groupBy = array();

if ( ! empty($this->_params['group_bys']) ) {

foreach ( $this->_columns as $tableName => $table ) {

if ( ! empty($table['group_bys']) ) {

foreach ( $table['group_bys'] as $fieldName => $field ) {

if ( CRM_Utils_Array::value( $fieldName, $this->_params['group_bys'] ) ) {

$this->_groupBy[] = $field['dbAlias'];

}

}

}

}

}

$this->_groupBy[] = "{$this->_aliases['civicrm_activity']}.id";

$this->_groupBy = "GROUP BY " . implode( ', ', $this->_groupBy ) . " ";

}

function buildACLClause( $tableAlias ) {

// override for ACL (since Contact may be source

// contact/assignee or target, it may also be null)

require_once 'CRM/Core/Permission.php';

require_once 'CRM/Contact/BAO/Contact/Permission.php';

if ( CRM_Core_Permission::check( 'view all contacts' ) ) {

$this->_aclFrom = $this->_aclWhere = null;

return;

}

$session = CRM_Core_Session::singleton( );

$contactID = $session->get( 'userID' );

if ( ! $contactID ) {

$contactID = 0;

}

$contactID = CRM_Utils_Type::escape( $contactID, 'Integer' );

CRM_Contact_BAO_Contact_Permission::cache( $contactID );

$clauses = array();

foreach( $tableAlias as $k => $alias ) {

$clauses[] = " INNER JOIN civicrm_acl_contact_cache aclContactCache_{$k} ON ( {$alias}.id = aclContactCache_{$k}.contact_id OR {$alias}.id IS NULL ) AND aclContactCache_{$k}.user_id = $contactID ";

}

$this->_aclFrom = implode(" ", $clauses );

$this->_aclWhere = null;

}

function postProcess( ) {

$this->buildACLClause( array( 'contact_civireport' , 'civicrm_contact_target', 'civicrm_contact_assignee' ) );

parent::postProcess();

}

function alterDisplay( &$rows ) {

// custom code to alter rows

$entryFound = false;

$activityType = CRM_Core_PseudoConstant::activityType( true, true, false, 'label', true );

$activityStatus = CRM_Core_PseudoConstant::activityStatus();

$viewLinks = false;

require_once 'CRM/Core/Permission.php';

if ( CRM_Core_Permission::check( 'access CiviCRM' ) ) {

$viewLinks = true;

$onHover = ts('View Contact Summary for this Contact');

$onHoverAct = ts('View Activity Record');

}

foreach ( $rows as $rowNum => $row ) {

if ( array_key_exists('civicrm_contact_contact_source', $row ) ) {

if ( $value = $row['civicrm_activity_source_contact_id'] ) {

if ( $viewLinks ) {

$url = CRM_Utils_System::url( "civicrm/contact/view" ,

'reset=1&cid=' . $value ,

$this->_absoluteUrl );

$rows[$rowNum]['civicrm_contact_contact_source_link' ] = $url;

$rows[$rowNum]['civicrm_contact_contact_source_hover'] = $onHover;

}

$entryFound = true;

}

}

if ( array_key_exists( 'civicrm_contact_contact_assignee', $row ) &&

$row['civicrm_activity_assignment_assignee_contact_id'] ) {

$assignee = array( );

//retrieve all contact assignees and build list with links

require_once 'CRM/Activity/BAO/ActivityAssignment.php';

$activity_assignment_ids = CRM_Activity_BAO_ActivityAssignment::getAssigneeNames( $row['civicrm_activity_id'], false, true );

foreach ( $activity_assignment_ids as $cid => $assignee_name ) {

if ( $viewLinks ) {

$url = CRM_Utils_System::url( "civicrm/contact/view", 'reset=1&cid=' . $cid, $this->_absoluteUrl );

$assignee[] = "<a href='{$url}'>{$assignee_name}</a>";

} else {

$assignee[] = $assignee_name;

}

}

$rows[$rowNum]['civicrm_contact_contact_assignee'] = implode( '; ', $assignee );

$entryFound = true;

}

if ( array_key_exists('civicrm_activity_activity_type_id', $row ) ) {

if ( $value = $row['civicrm_activity_activity_type_id'] ) {

$rows[$rowNum]['civicrm_activity_activity_type_id'] = $activityType[$value];

if ( $viewLinks ) {

// case activities get a special view link

if ( $rows[$rowNum]['civicrm_case_activity_case_id'] ) {

$url = CRM_Utils_System::url( "civicrm/case/activity/view" ,

'reset=1&cid=' . $rows[$rowNum]['civicrm_activity_source_contact_id'] .

'&aid=' . $rows[$rowNum]['civicrm_activity_id'] . '&caseID=' . $rows[$rowNum]['civicrm_case_activity_case_id'],

$this->_absoluteUrl );

} else {

$url = CRM_Utils_System::url( "civicrm/contact/view/activity" ,

'action=view&reset=1&cid=' . $rows[$rowNum]['civicrm_activity_source_contact_id'] .

'&id=' . $rows[$rowNum]['civicrm_activity_id'] . '&atype=' . $value ,

$this->_absoluteUrl );

}

$rows[$rowNum]['civicrm_activity_activity_type_id_link'] = $url;

$rows[$rowNum]['civicrm_activity_activity_type_id_hover'] = $onHoverAct;

}

$entryFound = true;

}

}

if ( array_key_exists('civicrm_activity_status_id', $row ) ) {

if ( $value = $row['civicrm_activity_status_id'] ) {

$rows[$rowNum]['civicrm_activity_status_id'] = $activityStatus[$value];

$entryFound = true;

}

}

if ( !$entryFound ) {

break;

}

}

}

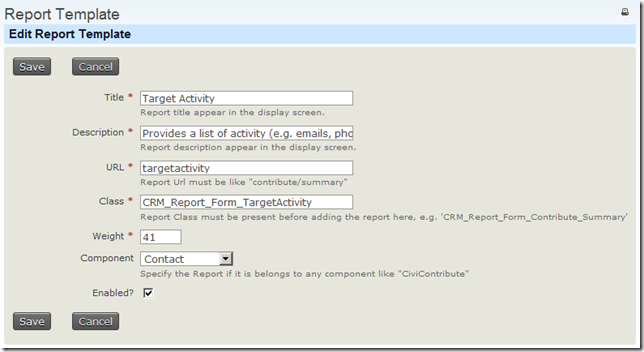

}Name the file with the same name as the template file, and save it in the corresponding location in the custom_php directory. In my case, I saved the file as sitesdefaultfilescivicrmcustom_phpCRMReportFormTargetActivity.php5. Now that the necessary PHP and Template files have been created, the report can be registered using the CiviCRM interface. Go to Administer –> CiviReport –> Manage Templates and click on Register New Report Template. Name and describe your report, and make sure you supply the correct classname as you defined in the PHP file above. I completed the values as below:

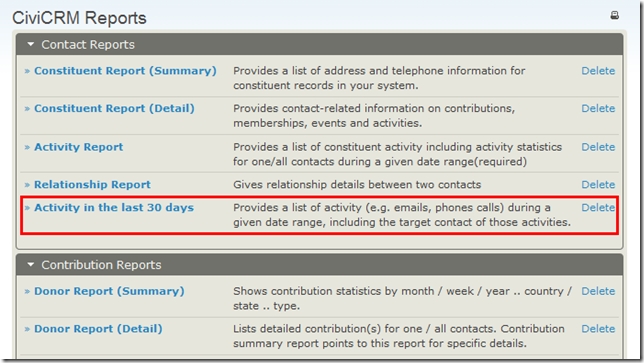

6. Now that the new report template has been registered, you can create individual reports based on that template as usual, by going to Reports –> Create Report from Template. Individual reports based on the template can be added to the navigation dashboard alongside default CiviCRM reports.
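The file naming used in steps 2 to 5 can be summarised in a few lines of PHP. This is only a minimal sketch: reportFileLocations() is a hypothetical helper, not part of CiviCRM, and it assumes that the class name is the path below the custom directory with the slashes replaced by underscores, in the same way that CRM/Report/Form.tpl pairs with the CRM_Report_Form class.

```php
<?php
// Hypothetical helper (not a CiviCRM API): given the two custom directories
// configured in step 1 and a report name, work out where the template and
// PHP files go and which class name to register in step 5.
function reportFileLocations($customTemplateDir, $customPhpDir, $reportName)
{
    // Both files mirror the CRM/Report/Form layout of the built-in reports.
    $relative = 'CRM/Report/Form/' . $reportName;

    return array(
        'template' => rtrim($customTemplateDir, '/') . '/' . $relative . '.tpl',
        'php'      => rtrim($customPhpDir, '/') . '/' . $relative . '.php',
        // Assumption: the class name is the relative path with "/" replaced by "_".
        'class'    => str_replace('/', '_', $relative),
    );
}

// Example using the directories and report name from this post.
$locations = reportFileLocations(
    '/sites/default/files/civicrm/custom_templates',
    '/sites/default/files/civicrm/custom_php',
    'TargetActivity'
);

foreach ($locations as $kind => $value) {
    echo $kind . ': ' . $value . PHP_EOL;
}
```

If the class name printed here does not match the one declared in your PHP file, the template registration in step 5 is the first place to check.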

Saturday, 7 May 2011

Google Map Maker for the United States

Linux Foundation Collaboration Summit 2011

Andrew Morton will be in a panel discussion on The Linux Kernel: What's Next at 11 AM on Wednesday April 6th.

Michael Rubin, Technical Lead for Google's Kernel Storage team, will be presenting a keynote on File systems in the Cloud at 3:30 on Wednesday.

Ian Lance Taylor will be discussing the Go Programming Language at 2:15 on Thursday the 7th as part of the Tools track.

Later on Thursday, Jeremy Allison of the Open Source Programs Office will explain Why Samba Switched to GPLv3 at 4 PM.

Although the event is invitation only, live video streaming is available for free, so everyone can watch the keynotes and panel discussions. Tune in to the video stream, or if you’ll be attending, introduce yourself after any of the talks!

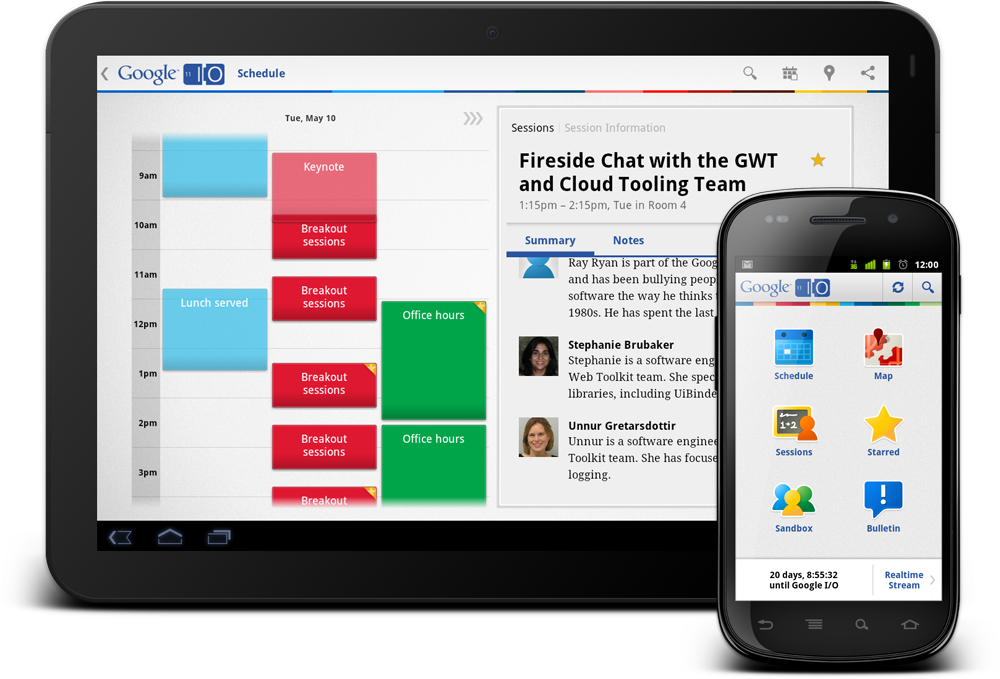

Google I/O goes mobile

For the 2011 edition we redesigned the app to support Android tablets, taking advantage of the extra screen space to offer a realtime activity stream for Google I/O as well as a tablet-optimized layout. For the first time, you’ll be able to stay up to date with I/O as it happens, regardless of whether you’re using your computer, tablet, or smartphone.

Our most popular features from last year are making a comeback for the Google I/O 2011 mobile app as well. Browse through session content and schedules, orient yourself with a map, check out the Sandbox, and take notes to get the most out of your experience at the conference.

Speaking of Android, please remember that if you have an old, unlocked Android device, you’ll be able to donate it at the Android for Good booth at I/O to support NGOs and educational institutions in developing countries.

Get the Google I/O 2011 mobile app today by scanning the QR code above or by visiting this link from your computer or your Android device.

Thursday, 5 May 2011

Google Maps: York ME Found; Google gets Letter from Congresswoman

But the story of York is really the story of Maps and Places and it is in many ways bigger than the loss of a single geographic entity. Google has insinuated Maps and Places very deeply into our lives. People and businesses depend on both products day in and day out to get their jobs done and their sales made. Google Maps and Places are no longer just services but have become critical utilities.

People expect utilities to work and when they don’t, folks expect that they will be repaired in a timely, seamless way.

With York, the process of repair was anything but seamless and the residents decided to involve their local Congresswoman, Chellie Pingree, on their behalf. Shortly before the problem was solved, she shot off a letter to Larry Page looking for a solution, which got the attention of the local news.

The issue isn’t just that York was lost (although that is a growing social and business problem). Nor even that it was lost again. As Top Contributor EHG (who did a great job on the problem) points out, the real failure is not in the data error per se or even in the repeated loss of the data but in a business process that takes repeated and often futile interactions to get a serious problem fixed. This is not the only problem that is handled this way: virtually every issue with Maps and Places, and there are many, has had these process issues since day one. I went so far as to flow chart the process of how a typical problem is solved in Maps and it ain’t pretty!

In Maps and Places Google has long been steadfast in its insistence that new products should be shipped before old products are fixed. They have long insisted that there would be no support on a “free” product. They have long ignored and not fixed problems of both product and more importantly process in the Maps and Places arena.

The question I have, though, is: at what cost to Google?

As Google Places has moved to a dominant position in our lives and a dominant position in the industry, it no longer is flying under the radar. Google Places has about 3 million claimed business listings in the US alone. That means that 20% of all businesses are directly interacting with the product and can see not just the benefits but the costs of doing business with Google.

Problems that crop up no longer stay buried in the forums but are getting carried forward by these business folks to the politicians and to large regional, national and international newspapers. When you lose a town or misplace a highway it is no longer just an angry customer but a reporter or an ambitious politician that is taking up the cause. When Google does it repeatedly and leaves the questions unanswered, it runs the risk of ever-increasing scrutiny and loss of public confidence. Each and every one of these failures ends up tarnishing a great brand just a touch more until…

Let me have one of the affected business owners in York say it as he is just coming to the realization that has been so evident to us in the marketing business for the past 3 or 4 years:

As an early adopter of Google search, as an enthusiastic user/promoter of Gmail, as a lover of Picasa, as a blogger…etc. our disappointment with Google is devastating.

Don’t get me wrong. We are thrilled that this issue seems to be resolved. Thanks again to the Google person who finally solved this.

But when a company that you have endorsed, promoted to friends and family, used with enthusiasm a zillion times, becomes so clumsy that it won’t respond to it’s customers over a period of months, the sense of betrayal is very real.

Every company and every country has it’s early surge of growth and customer focus. Then at some point, it loses its grip and becomes so large and bureaucratic that it becomes ineffective.

I truly hope that this event is an anomaly. I love most of what Google has done. I want to be that enthusiastic booster again. How could that happen?

Google could begin by responding directly via phone call or email to customers with legitimate concerns. Otherwise we are left with the impression that we are just pawns in a huge corporate mosh pit.

A simple human outreach would have solved this problem and saved the world of search thousands of hours of wasted time. Most of all, it would have restored Googles reputation of “doing no evil” as well as being the nimble organization it started out being.

Perhaps some top executives could actually read some of the “reported problems” and set goals for resolution for their engineers. Don’t tell me it isn’t practical. I used to be corporate dude in a major company. All it takes is the right attitude at the top.

Google’s corporate goal is to achieve lower operational costs through automation of their customer service and to do so while creating an ever stronger brand. They have attempted to do so in Maps and Places and have failed. It is possible to do. A company like Amazon demonstrates that, with superlative online products and impeccable processes, it can be done.

The solution for Google? The answer is easy and one that Google has articulated to me right along. Fix the products and fix the processes to report problems… make them easier to use (without dumbing them down) and create processes that can solve 99.9% of the problems in an automated way. Why it has taken so long is anyone’s guess. But, and this is a big but, until it does happen Google needs staffing and people to deal with the issues.

Local is widely perceived as the next critical piece for the growth of the internet. When it comes to income opportunities it may even be more important than social. As the local internet gets even more entwined in our lives, Google risks more than a few angry business people with their products, processes and customer service. Google, in failing on these fronts in Maps and Places, is putting the reputation AND success of the whole company on the line.

Wednesday, 4 May 2011

Video Chat on Your Android Phone

You can now video or voice chat with your friends, family and colleagues right from your Android phone, whether they’re on their compatible Android tablet or phone, or using Gmail with Google Talk on their computer. You can make calls over a 3G or 4G data network (if your carrier supports it) or over Wi-Fi.

Google Maps –vs- Bing Maps –vs- OSM

Seeing as I’ve already made comparisons in some of my own recent blog posts concerning the accuracy and completeness of various online mapping providers, the content in Glenn’s article gave me an idea for another comparison…

Firstly, I would find an error somewhere in each of Google Maps, Bing Maps, and Open Street Maps. Then, using the official feedback channels described by Glenn, I would report those errors to the relevant providers. Points would be awarded to each provider based on the ease with which problems could be reported, how long it took for the error to be acknowledged, and how long it took for the error to be corrected on the map.

So, I set off to find some errors:

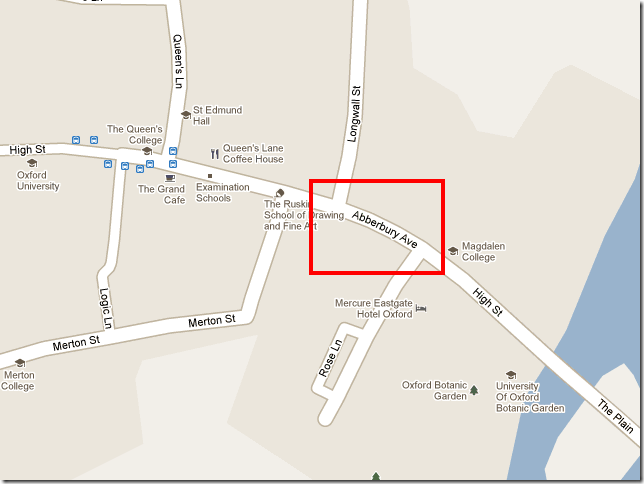

Google Maps Error – “Abberbury Avenue” in the Heart of Oxford

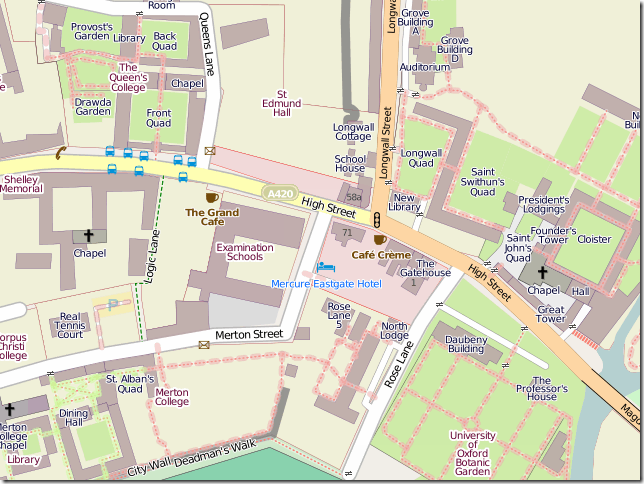

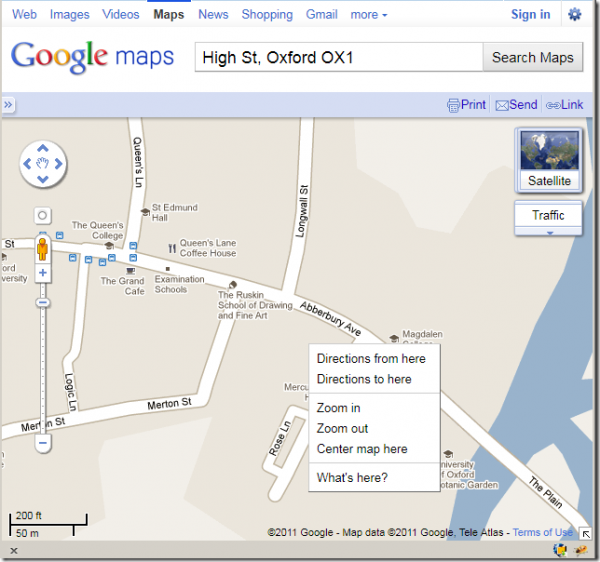

I was born and brought up in Oxford, England. When I returned there recently, I was surprised when driving down Longwall Street in the city centre that my (Google Android-based) car navigation told me to “turn left onto Abberbury Avenue”. In 18 years, I had never heard of Abberbury Avenue, and was certain that the road in front of me was the High Street.

On returning home, I checked on the main Google Maps website. Here’s a screenshot of the offending road in question, right next to Magdalen College in the heart of the city:

And here’s how that same section appears correctly in OSM – it’s definitely the High Street (also notice, once again, the awesome level of detail in the OSM map – the college quads being individually and correctly labelled etc.):

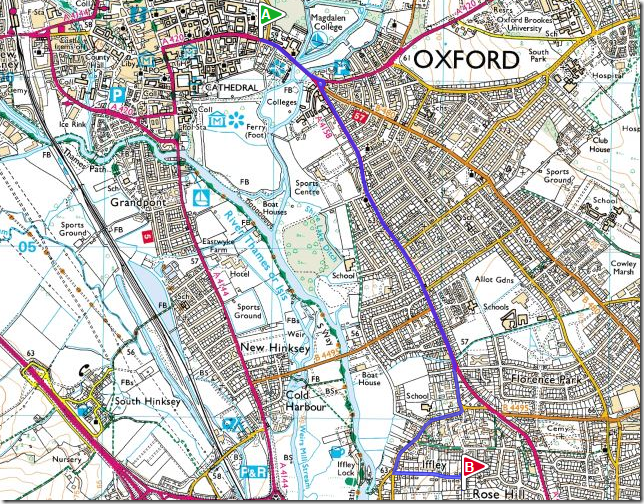

There is an Abberbury Avenue in Oxford, incidentally, but it is found several miles away, near Rose Hill. The journey from Google’s “Abberbury Avenue” to the true location is shown as follows:

So, I set about following Glenn’s instructions to report the error. And then I stumbled across my second problem. The steps described to report a problem in Google Maps, which are also stated by Google on their own help pages, say:

You can access Report a Problem in a couple of places:

- Right click on the map and select “Report a Problem”

- Click the “Report a Problem” link at the bottom right of the map

That’s right – Right-clicking on the map does not give an option for “Report a Problem”, and neither is there a “Report a Problem” link at the bottom right of the map. From a quick search of the internet, it seems that the ability to report errors on Google Maps is limited to users in the USA, so that’s points lost immediately for Google…

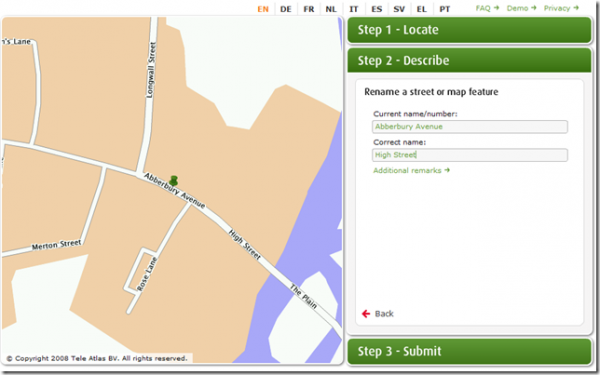

So how exactly am I meant to report this problem? Fortunately, there is a copyright notice at the bottom of the map crediting Tele Atlas as the source for Google’s map data, and Glenn’s article also gives instructions on how to give feedback directly to them. Fortunately, this is a very user-friendly step-by-step process, and I completed the details of the error and submitted it as shown below:

I was given a tracking reference number and a link to track the progress of the error online. In theory, if the error is corrected in Tele Atlas’ own dataset, the fix will eventually feed back into Google Maps… let’s see if it does.

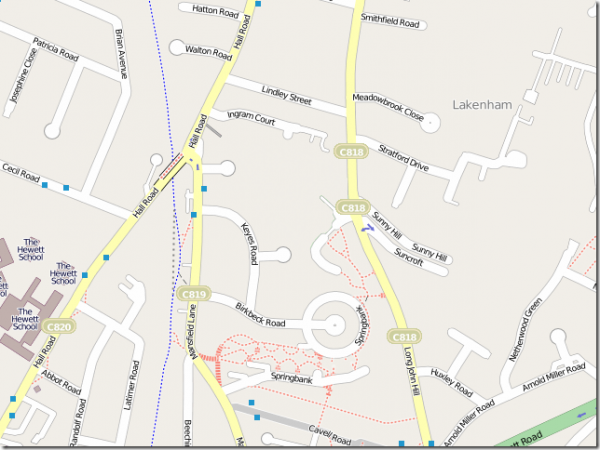

Bing Maps – Driving down a Pedestrian-only way

The nature of the error I found in the Bing Maps website was slightly different. I couldn’t find any labelling errors on the map, but when trying to use the directions feature to plot a driving route between Norvic Drive and Peckover Road in Norwich, I noticed an interesting instruction:

2. Turn right onto Chalfont Walk

This immediately caught my attention – pretty much any road named Chalfont Walk is, well, designed for walking, not for driving. Sure enough, as shown in Open Street Maps, it’s pedestrian access only:

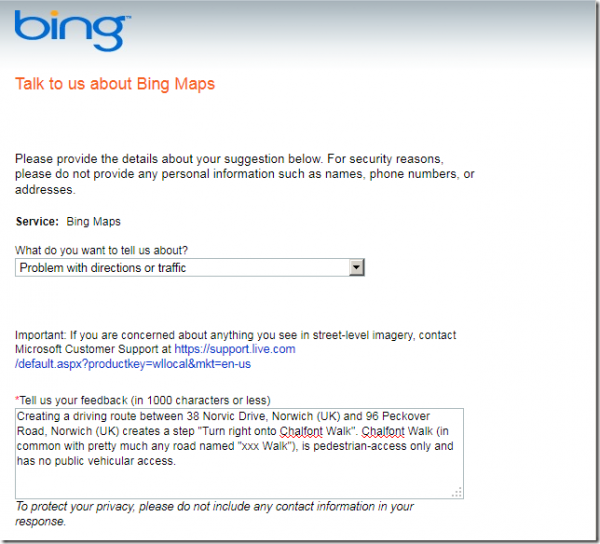

Fortunately, the Bing Maps website does have a feedback link at the bottom right corner, with different options to report problems with e.g. imagery, points of interest on the map, directions, etc. I filled in the feedback form with details of the error as follows:

I was given a “Thank you for taking the time to tell us about your experience” message, but no tracking reference and I didn’t have the opportunity to provide an email address, so Bing Maps also loses points here. I guess I’ll just have to keep checking back to see when (if) the problem is solved….

Open Street Maps

As noted in a previous post of mine, OSM has incredibly high quality data – higher, I believe, than that of the Ordnance Survey. Finding an error on the OSM map was therefore going to prove tricky. In fact, the only “error” I could find was not really an error – more an omission (and a pretty minor one at that). Jubilee Park, a park near my house in Norwich, wasn’t shown on the map:

So, you know what? I just logged into http://osm.org and, using the online map editor, added the missing feature. 5 minutes later, and here’s the correct OSM map:

So, that’s full marks for OSM. But who will come in second place out of Bing and Google? I’ll let you know as soon as I hear anything from either of them.

Tuesday, 3 May 2011

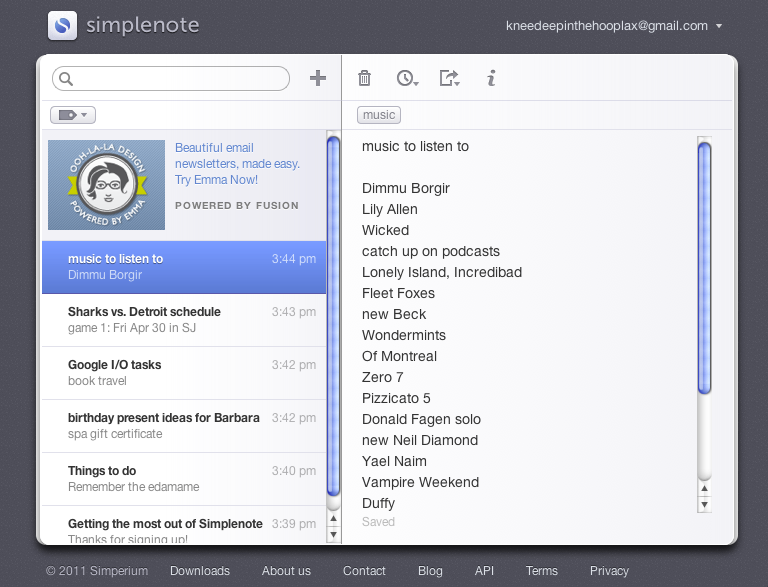

Google App Engine for Simplenote

Google App Engine is at the heart of it. We made a decision early on to use App Engine so we wouldn't have to worry about scaling, or deploying more servers, or systems administration of any kind. Being able to instantly deploy new versions of code has allowed us to iterate quickly based on feedback we get from our users, and easily test new features in our web app, like the newly added Markdown support.

We consider our syncing capabilities to be core features of Simplenote. They are, in and of themselves, largely responsible for attracting and retaining many of our users. Our goal is to give other developers access to great syncing, too. The next version of our backend is named after our company, Simperium. As a general-purpose, realtime syncing platform intended for third-party use, Simperium's architecture is much more expansive than the Simplenote backend. Yet App Engine still plays a key role. It powers the Simplenote API that is used by dozens of great third-party apps like Notational Velocity. And it continues to power auxiliary systems, like processing payments with Stripe, while bridging effectively with externally hosted systems, like our solution for storing notes as files in the wonderful Dropbox.

We suspected we might outgrow App Engine, but we haven't. Instead, our use of it has evolved along with our needs. Code we wrote for App Engine a year ago continues to hum along today, providing important functionality even as new systems spring up around it.

In fact, we still come up with entirely new ways to use App Engine as well. Just last week we launched an internal system that uses APIs from Twitter, Amazon Web Services, Assistly, and HipChat to pump important business data into our private chat rooms. This was a breeze to write and deploy using App Engine. Such is the mark of a versatile and trustworthy tool: it's the first thing you reach for in your tool belt.