Thursday, 29 December 2011

The Kindle Fire

Today’s blog post comes to us from Greg Bayer of Pulse, a popular news reading application for iPhone, iPad and Android devices. Pulse has used Google App Engine as a core part of their infrastructure for over a year and they recently celebrated a significant launch. We hope you find their experiences and tips on scaling useful.

As part of the much-anticipated Kindle Fire launch, Pulse was announced as one of the few preloaded apps. When you first unbox the Fire, Pulse will be there waiting for you on the home row, next to Facebook and IMDb!

Scale

The Kindle Fire is projected to sell over five million units this quarter alone. This means that those of us who work on backend infrastructure at Pulse have had to prepare for nearly doubling our user base in a very short period. We also need to be ready for spikes in load due to press events and the holiday season.

Architecture

As I’ve discussed previously on the Pulse Engineering Blog, Pulse’s infrastructure has been designed with scalability in mind from the beginning. We’ve built our web site and client APIs on top of Google App Engine, which has allowed us to grow steadily from tens to many thousands of requests per second without needing to re-architect our systems.

While restrictive in some ways, we’ve found App Engine’s frontend serving instances (running Python in our case) to be extremely scalable, with minimal operational support from our team. We’ve also found the datastore, memcache, and task queue facilities to be equally scalable.

Pulse’s backend infrastructure provides many critical services to our native applications and web site. For example, we cache and serve optimized feed and image data for each source in our catalog. This allows us to minimize latency and data transfer, and is especially important for providing an exceptional user experience on limited mobile connections. Providing this service for millions of users requires us to serve hundreds of millions of requests per day. As with any well-designed App Engine app, the vast majority of these requests are served out of memcache and never hit the datastore. Another useful technique is to set public cache control headers wherever possible, so that Google’s edge cache (shown as cached requests on the graph below) and ISP / mobile carrier caches can serve unchanged content directly to users.
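
The two techniques above (memcache-first reads and public cache control headers) can be sketched roughly as follows. This is a minimal illustration and not Pulse’s actual code: `FakeMemcache`, `get_feed`, `public_cache_headers`, the key format, and the TTL values are all hypothetical, and the in-process cache only stands in for a real memcache client so the example runs on its own.

```python
import time


class FakeMemcache:
    """In-process stand-in for a memcache client (hypothetical, get/set only)."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        value, expires_at = self._data.get(key, (None, 0.0))
        if value is not None and time.time() >= expires_at:
            # Entry has expired: drop it and report a miss.
            del self._data[key]
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._data[key] = (value, time.time() + ttl_seconds)


def get_feed(source_id, cache, load_from_datastore, ttl_seconds=300):
    """Return feed data, touching the datastore only on a cache miss."""
    key = "feed:%s" % source_id  # assumed key scheme, for illustration only
    cached = cache.get(key)
    if cached is not None:
        return cached
    feed = load_from_datastore(source_id)
    cache.set(key, feed, ttl_seconds)
    return feed


def public_cache_headers(max_age_seconds):
    """Headers that allow shared caches (edge, ISP, carrier) to store a response."""
    return {"Cache-Control": "public, max-age=%d" % max_age_seconds}


# Usage: the second request is served from the cache.
datastore_reads = []


def slow_load(source_id):
    datastore_reads.append(source_id)
    return {"source": source_id, "items": ["story-1", "story-2"]}


cache = FakeMemcache()
get_feed("example-source", cache, slow_load)
get_feed("example-source", cache, slow_load)
print(len(datastore_reads))  # prints 1
```

Marking a response `public` is only appropriate for content that is identical for every user, which is why it fits per-source feed and image data.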

Costs

Based on App Engine’s projected billing statements leading up to the recent pricing changes, we were concerned that our costs might increase significantly. To prepare for these changes and the expected additional load from Kindle Fire users, we invested some time in diagnosing and reducing these costs. In most cases, the increases turned out to be an indicator of inefficiencies in our code and/or in the App Engine scheduler. With a little optimization, we have reduced these costs dramatically.

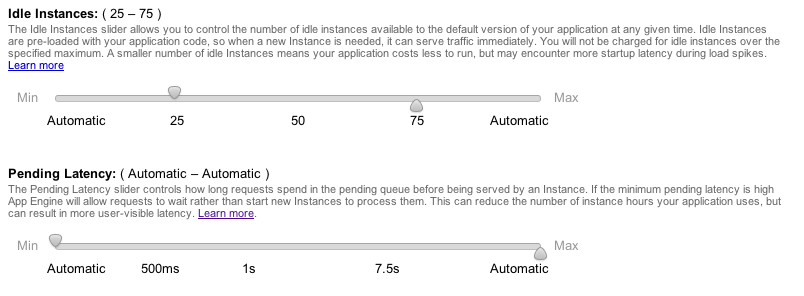

The new tuning sliders for the scheduler make it possible to rein in overly aggressive instance allocation. Under the old pricing structure, idle instance time wasn’t charged for at all, so these inefficiencies were usually ignored. Now App Engine charges for all instance time by default. However, whenever App Engine runs more idle instances than you’ve allowed, the hours for those extra instances are free. The setting also acts as a hint to the scheduler, helping it reduce unneeded idle instances. By testing to find the right balance between cost and tolerance for latency during load spikes, and setting the sliders to those levels, we were able to bring our frontend instance costs back to near their original levels. Our heavy usage of memcache (which is still free!) also helps keep our instance hours down.
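
To make the effect of the idle-instance cap concrete, here is a deliberately simplified model. It assumes that in each interval you pay for every busy instance plus idle instances only up to the configured maximum. The function name, parameters, and sample numbers are hypothetical, and this is not App Engine’s actual billing formula.

```python
def billable_instance_hours(samples, max_idle_instances, hours_per_sample=1.0):
    """Estimate billable hours under a simplified idle-instance cap.

    samples: list of (busy_instances, idle_instances) tuples, one per interval.
    Idle instances beyond max_idle_instances are treated as free (assumption).
    """
    total = 0.0
    for busy, idle in samples:
        billed_idle = min(idle, max_idle_instances)
        total += (busy + billed_idle) * hours_per_sample
    return total


# Made-up hourly samples: (busy, idle)
samples = [(4, 6), (10, 2), (3, 8)]
print(billable_instance_hours(samples, max_idle_instances=10))  # prints 33.0
print(billable_instance_hours(samples, max_idle_instances=2))   # prints 23.0
```

In this toy model a lower cap always costs less. The real trade-off is that fewer warm idle instances leave less headroom to absorb a sudden spike, which is why the settings are worth testing rather than simply minimizing.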

Since datastore operations used to be charged under the umbrella of CPU hours, it was difficult to know the cost of these operations under the old pricing structure. This meant it was easy to miss application inefficiencies, especially for write-heavy workloads where additional indexes can have a multiplicative effect on costs. In our case, the new datastore write operations metric led us to notice some inefficiencies in our design and a tendency to overuse indexes. We are now working to minimize the number of indexes our queries rely on, and this has started to reduce our write costs.
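
A quick back-of-the-envelope calculation shows why extra indexes multiply write costs. The default weights below (2 writes per new entity, 2 per indexed property value, 1 per composite index entry) are my recollection of App Engine’s published billing rules from this period; treat them as assumptions and check the current documentation. The function name and example counts are hypothetical.

```python
def writes_per_new_entity(indexed_values, composite_index_entries,
                          base_writes=2, writes_per_indexed_value=2,
                          writes_per_composite_entry=1):
    """Estimate billed write operations for putting one new entity.

    All weights are assumed defaults, not values confirmed by this post.
    """
    return (base_writes
            + writes_per_indexed_value * indexed_values
            + writes_per_composite_entry * composite_index_entries)


# An entity with ten indexed property values and three composite index entries...
print(writes_per_new_entity(10, 3))  # prints 25
# ...versus the same entity with only two properties left indexed.
print(writes_per_new_entity(2, 0))   # prints 6
```

Under these assumptions, leaving properties that are never queried unindexed cuts the write cost of each put by roughly a factor of four in this example.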

Preparing for the Kindle Fire Launch

We took a few additional steps to prepare for the expected load increase and spikes associated with the Fire’s launch. First, we contacted App Engine’s support team to warn them of the expected increase. This is recommended for any app at or near 10,000 requests per second, to make sure your application is correctly provisioned. We also signed up for a Premier account, which gets us additional support and simpler billing.

Architecturally, we decided to split our load across three primary applications, each serving different use cases. While this makes it harder to access data across these applications, those same boundaries serve to isolate potential load-related problems and make tuning simpler. In our case, we were able to split off the parts of our infrastructure where cross-application data access mattered less and load would be significant. Until App Engine provides more visibility into and control of memcache eviction policies, this approach also helps prevent lower priority data from evicting critical data.

I’m hopeful that in the near future such division of services will not be required. Individually tunable load isolation zones and memcache controls would certainly make it a lot more appealing to have everything in a single application. Until then, this technique works quite well, and helps to simplify how we think about scaling.
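
The memcache isolation argument can be illustrated with a toy cache. The sketch below assumes a plain least-recently-used policy purely for illustration, since App Engine’s actual eviction behavior is not described here. `LRUCache`, the capacities, and the key names are all hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Tiny least-recently-used cache (assumed policy, for illustration only)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def set(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        while len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict least recently used


def write_low_priority(cache, count):
    """Simulate a burst of low-priority writes."""
    for i in range(count):
        cache.set("low:thumb-%d" % i, "bytes")


# One shared cache: a burst of low-priority writes pushes out critical data.
shared = LRUCache(capacity=4)
shared.set("critical:catalog", "catalog-data")
write_low_priority(shared, 10)
print(shared.get("critical:catalog"))  # prints None

# Separate caches (one per application): the burst cannot touch critical data.
critical_cache = LRUCache(capacity=2)
low_priority_cache = LRUCache(capacity=2)
critical_cache.set("critical:catalog", "catalog-data")
write_low_priority(low_priority_cache, 10)
print(critical_cache.get("critical:catalog"))  # prints catalog-data
```

The total capacity is the same in both setups. Only the boundary between the caches changes which data can be evicted by which workload.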
